Artificial Intelligence is an incredibly hot topic, and every vendor claims to be doing something with it, whether they build robots, servers, networks, washing machines or, of course, storage. In this entry I am going to briefly touch on some IT vendors that are doing interesting things around AI, either by building products that serve it or by using it to provide better products and services.

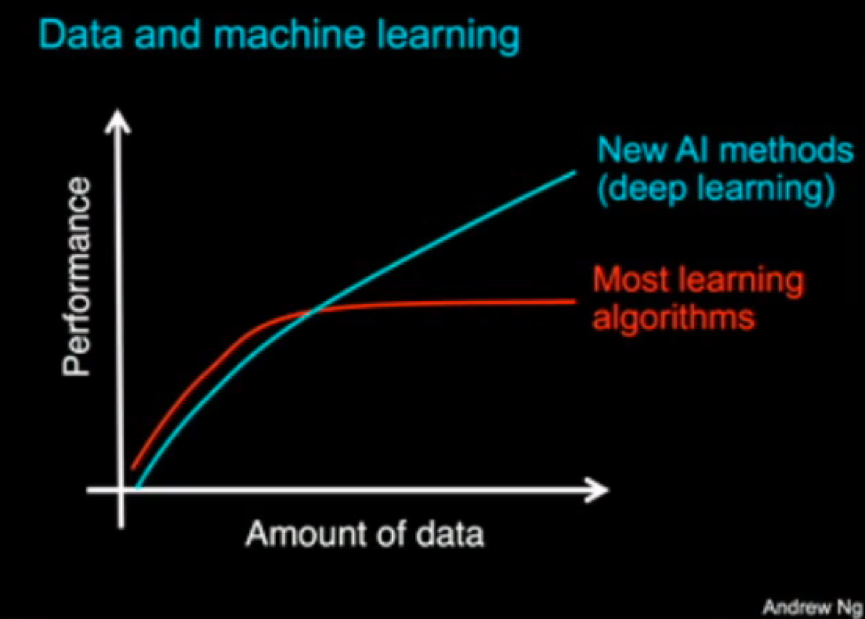

One of the key differences for AI nowadays is the amount of data available to train models and the use of GPUs to shorten training cycles. This has created an inflection point and is definitely kicking off AI. We are seeing storage companies, and IT vendors in general, bring new products to serve AI, Machine Learning and Deep Learning use cases. In addition, the huge amount of data generated by their own systems is being used to provide better recommendations, simplify operations and reduce costs.

Pure Storage has created systems like FlashBlade from the ground up, designed to feed the most demanding AI algorithms. We saw at the latest Big Data 2017 conference in Madrid how they are partnering with NVIDIA, which provides the GPUs that are being used nowadays to dramatically speed up training. Those GPUs will be hungry for data, and the partnership with Pure Storage sounds very logical, as their systems can serve large files sequentially and random data with very high concurrency at the same time. One complicated step in AI is feeding the data pipeline, so flash is a very logical choice when building infrastructure to serve these new workloads. Pure Storage claims that FlashBlade has reduced by almost two-thirds the time needed to sequence and analyze data-intensive DNA samples at the RISELab at the University of California, Berkeley.

Pure Storage systems were designed from scratch to upload all their sensor information to the company's corporate servers. In my opinion that was a key and smart decision. It now gives Pure Storage unique information to make informed decisions and offer predictive support. They call it “Intelligent, Self-driving Storage” and it is based on Pure1 Meta. The key thing is that they have data that can be used to train AI algorithms: the company claims to collect over 1 trillion data points per day from their customers' systems. With that amount of data, they can predict replacement times, workloads, usage patterns, etc. Data is the lifeblood of any AI system.

Pure Storage is a clear example of a company that is not only helping to bring AI to the next level but also using AI internally to provide value to their customers.

ServiceNow, a cloud-based company that helps customers automate processes, collects a huge amount of data from servers running critical workloads and offers services to proactively detect and prevent service outages. ServiceNow strategically acquired DxContinuum, which specialized in Machine Learning algorithms. ServiceNow already had the key ingredient to build any AI system: data. With the right algorithms, they can make very good use of that data and provide better services to their customers.

One key thing those companies are taking advantage of is the immense amount of data being generated. Digesting that information is quite difficult for humans, and predicting a failure or outage is complex. By using AI, those companies are helping humans make better-informed decisions. AI analyzes the huge amount of data and provides recommendations to a system administrator, who in the end makes the final decision to replace a soon-to-fail disk or upgrade a database system. That combination reduces risk and increases operational efficiency.
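To make the "recommendation to the administrator" idea concrete, here is a minimal sketch of my own. It is not how any of the vendors above do it: the function names, the error-count metric, the threshold and the 30-day horizon are all hypothetical, and a simple linear trend stands in for whatever models they really train. It extrapolates a disk's daily error count and flags the disk when the trend would cross a threshold soon, leaving the final decision to a human.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Recommendation:
    disk_id: str
    days_to_threshold: Optional[float]  # None when no upward trend is detected
    replace_soon: bool                  # advice only; the admin decides


def linear_trend(values: Sequence[float]) -> Tuple[float, float]:
    """Least-squares slope and intercept for values sampled once per day."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def recommend(disk_id: str,
              daily_error_counts: Sequence[float],
              threshold: float = 100.0,      # hypothetical failure threshold
              horizon_days: float = 30.0) -> Recommendation:
    """Flag a disk whose error trend would reach the threshold within the horizon."""
    slope, intercept = linear_trend(daily_error_counts)
    if slope <= 0:
        # Errors are flat or decreasing: nothing to recommend.
        return Recommendation(disk_id, None, False)
    last_day = len(daily_error_counts) - 1
    # Day on which the fitted line hits the threshold, relative to today.
    days_left = max((threshold - intercept) / slope - last_day, 0.0)
    return Recommendation(disk_id, days_left, days_left <= horizon_days)
```

Calling `recommend("disk-7", [0, 10, 20, 30, 40])` flags the disk, while a flat series such as `[5, 5, 5]` does not. A real system would learn from the telemetry of many customers rather than from a single disk's trend, which is exactly why the amount of data collected matters so much.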

Not all companies serving data centers will have the capability to leverage AI in their products. Companies whose products sit in isolated silos in the data center will not be able to collect the amount of data needed to train systems effectively.

Hitachi Data Systems recently merged with Pentaho and Hitachi Insight Group to form Hitachi Vantara, which now targets use cases in IoT, data center, cloud and analytics. The big difference for the new company will be the amount of data they are collecting and how they make good use of it.

Nutanix also announced at their .NEXT conference that they will be providing storage not only for VMs but also for containers and for the edge, and ran a demo where sensors were able to identify the number of passengers in an airport queue.

Veritas Technologies showed a demo at their last VISION conference of their new solution, Veritas Cloud Storage, which introduces an integrated cognitive engine. In that demo a camera took pictures of people and tagged them automatically so that they could be searched later on.

AI is going to be a key technology in IT: not just IT serving AI (more capacity, faster storage, more GPUs, more memory), but AI serving IT to make it more efficient. We can therefore expect machines to become more intelligent, make better use of their resources and take care of themselves!

Again, the amount of data available, and how fast that data can be provided to the GPUs training the decision systems based on deep neural networks, will be critical to differentiate one company from another. As Andrew Ng states, the performance of new deep learning methods (based on convolutional networks) has increased dramatically with the amount of data available.

The topic is really hot and AI is a reality nowadays. I will be exploring all these initiatives in detail in the following entries.

Carlos Carrero.-