There is a clear consensus in the fact that data is the new oil. We have seen how the increase in data volume produces better results and has a clear impact in Machine Learning models. Data Engineers spend a good amount of time collecting and preparing data for Data Scientists so they can build better models. In order to get all the value from that new oil, we have to be able to extract it from very different sources and put it to work in our models. Data Scientists may spend too much time selecting what features will give the best result for their models and getting access to data from different sources sometimes is not an easy task.

Snowflake Data Cloud Platform makes things much easier for Data Scientists as it creates a single source of truth with all data available in a very easy way to consume and use it in the training of Machine Learning models. Here I am discussing an example for the well known Citibike demo. You can experience the 30-day free trial from Snowflake and try it yourself.

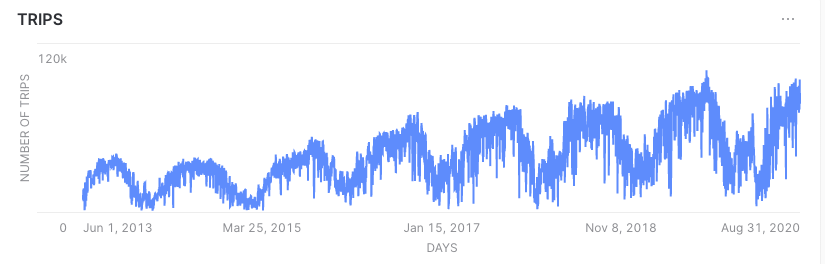

Citibike provides all info about their trips in csv format. With Snowflake, we can get those files that provide structured data and get a table with all that info. Once the data is within Snowflake I can start asking questions and for example get a view of the number of trips per day. With Snowsight I can also get a graphical representation of my data. We can see how there is some seasonality in the number of trips during the year:

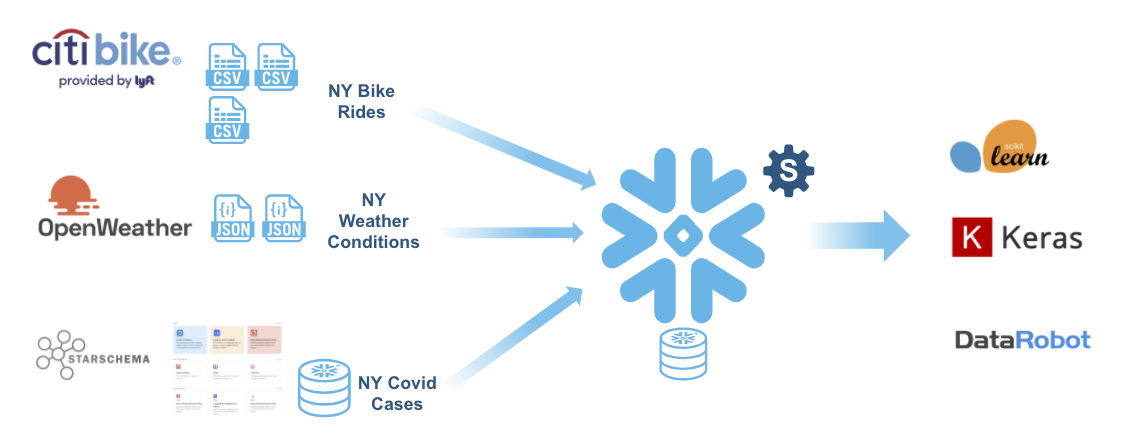

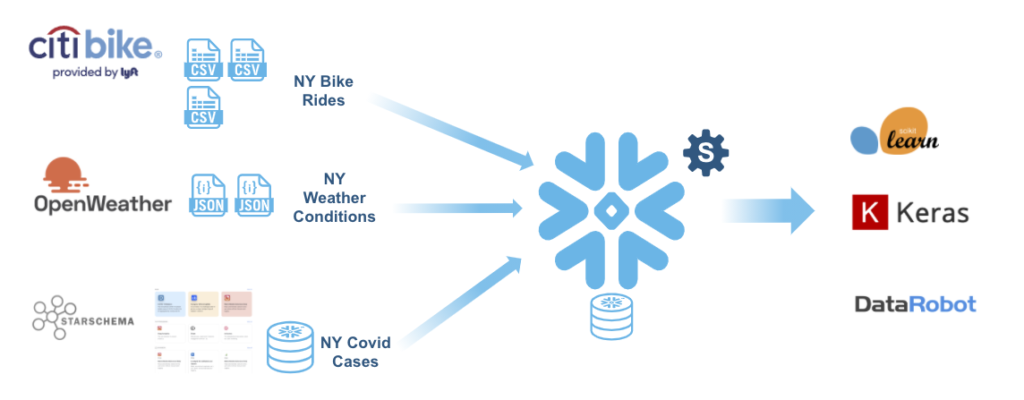

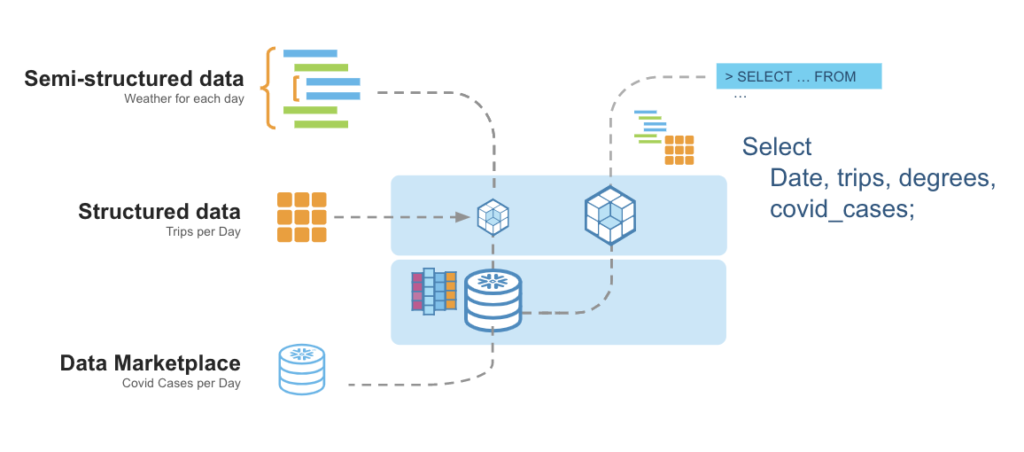

Using different Machine Learning models I want to see how I can predict the number of trips in a future time and how precise my model can be. My goal will be to see if using more features I can get better precision. In order to get those features I will use Snowflake Cloud Data Platform as a single source of truth and its capabilities to get data from CSV files from Citibike, get Weather data that is provided in JSON format and join it with the number of covid cases per day provided in the Data Marketplace by StarSchema. All this data will be used to train ML models using scikit-learn and Keras in Python using Jupyter Labs. Later I will also use DataRobot capabilities.

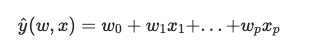

First I will fit a classic LinearRegression linear model using scikit-learn. The target value is a linear combination of the features multiplied by weights for each one. As a first approach I will get only the year, month and day as features, and the number of trips will always be the value I want to predict.

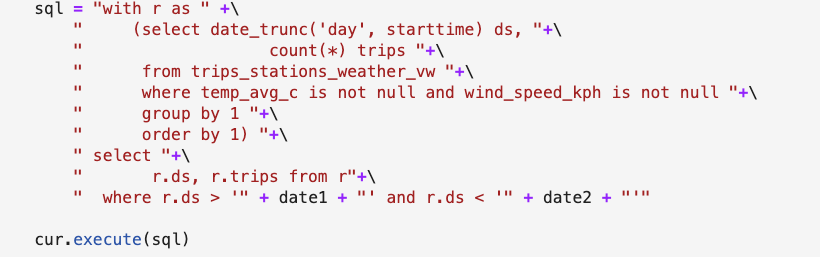

Getting the values to train my model is just running a standard SQL query against Snowflake Cloud Data Platform selecting the features I want to use. The view trips_stations_weather_vw provides both trips and weather information and it is a join form a table for trips with another table with JSON content with weather information:

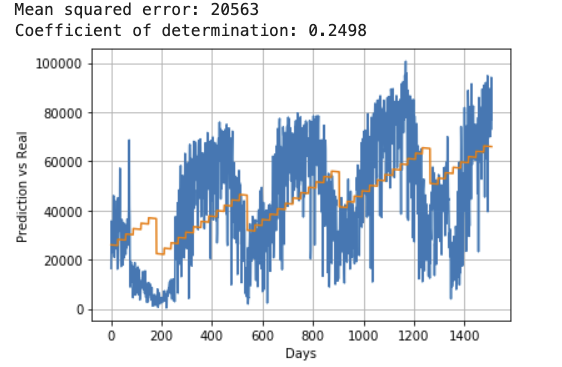

As I expected, my first result is not very good. The Root Mean Squared Error (RMSE) I got for my training data is 20563 trips:

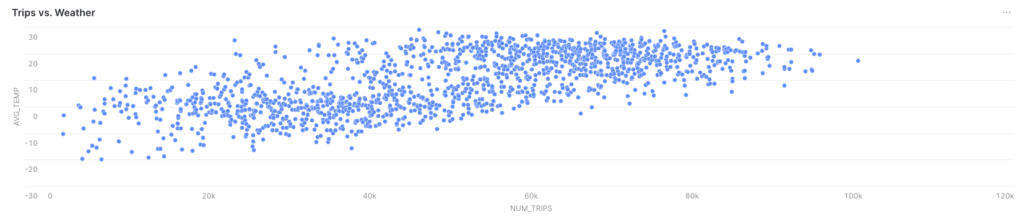

During my preview of the data using Snowsight I detected that weather conditions may influence the desire to use Citibike services:

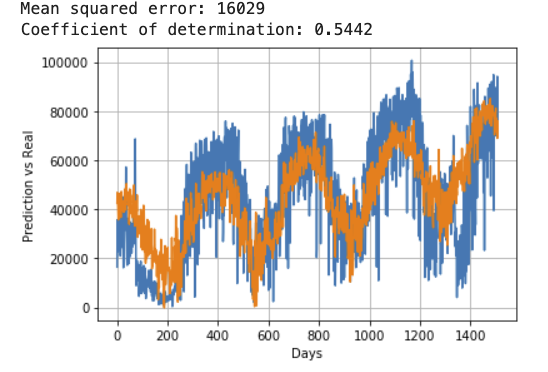

People are less willing to use bikes on a cold day. Using Snowflake Cloud Data Platform as a single source of truth, I can get weather values from OpenWeather. These are coming in JSON format, but that is not a problem. I can just copy the JSON files to Snowflake and leave it in that format using the variant data type. On top of that, I create a view that gets data from my trips table and also from my JSON data. Using that view I just select the average temperature for the day as a new feature for my model. As I can see, it produces better results than the previous one:

Predictions are closer to training data and RMSE has been reduced a bit.

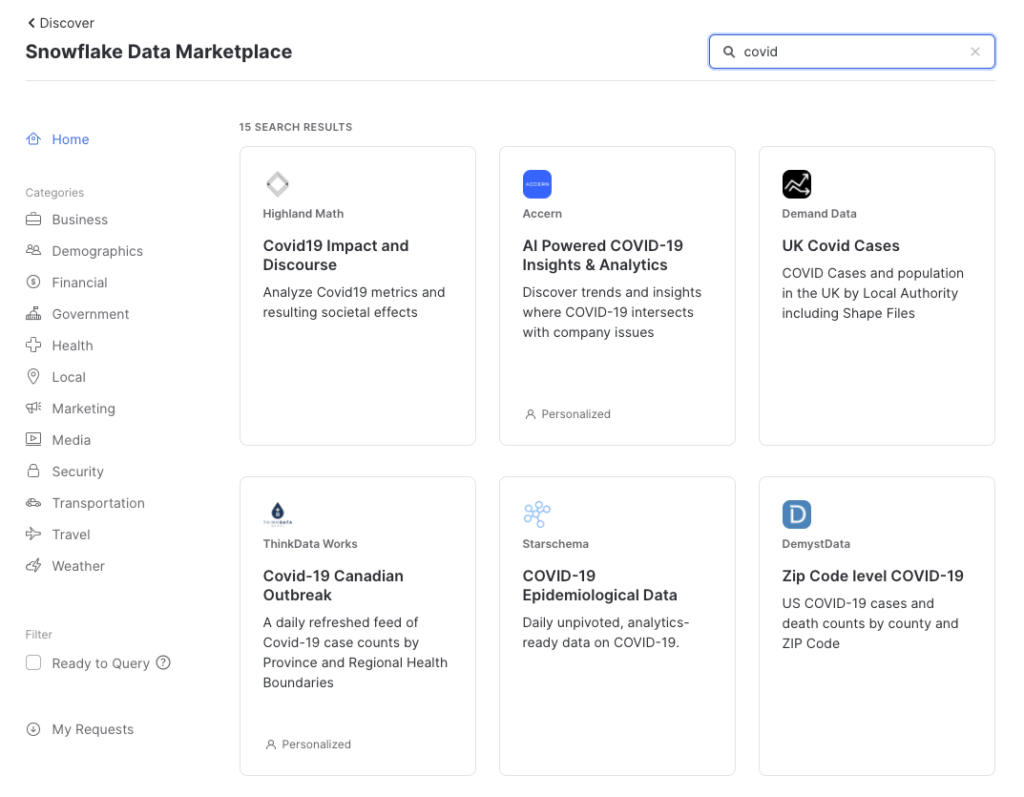

The world has been disrupted during 2020 because Covid-19. It has affected our lives and our business. In the graph for trips per day, we can see a clear unusual drop during March that has changed the tendency of previous years. Therefore, trying to add some data about Covid-19 may be a good idea. Again, Snowflake Cloud Data Platform makes this quite easy and helps to accelerate the Time to Value of our models. Using the Data Marketplace we can get access to many different kinds of data that will augment our models. There is no need to copy or import data. No need to generate duplicates or transfer data between different systems.

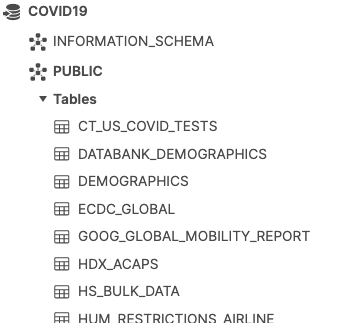

When we do it, we will see the database in our Snowflake account. Data has not been copied. It it just there accessible for us. We can create views, joins etc. In my case, looking for Covid-19 data, I found StarSchema that provides daily updates about covid cases. Here is the database and some of their tables.

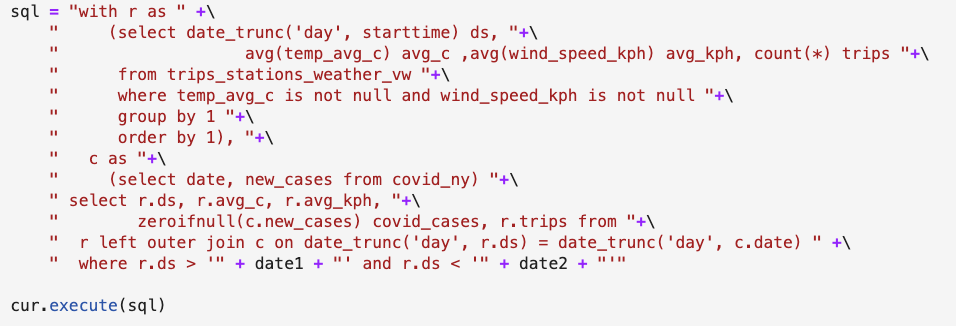

So I simply got the covid cases for NY City per day in a view that I can join with my previous query.

The table covid_ny has been generated with the specific info of cases for NY City. I can join that table with my previous view and get all the features I am looking for. Each time that query is executed I run a join between a table that was generated with CSV data, with JSON format, and with a database that is provided by a different company.

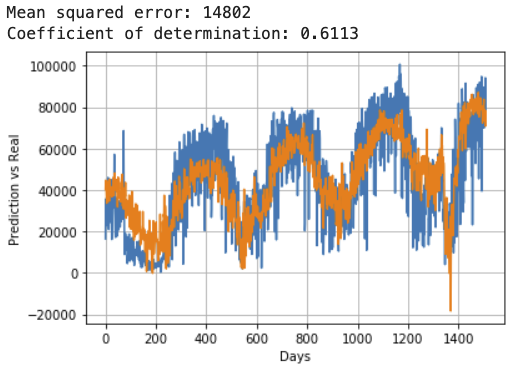

My RMSE has been reduced once I added this new feature, although there is a strange drop along with the pike on number of covid cases that affects the model. My RMSE is now 14802:

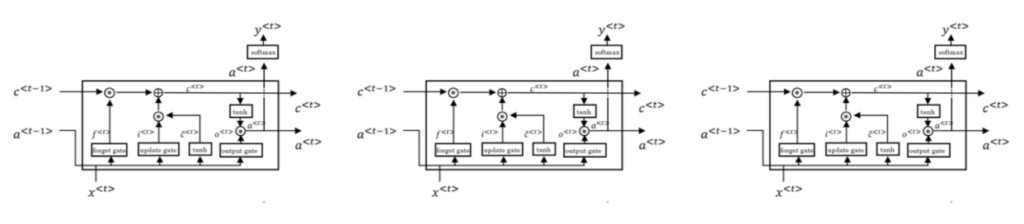

Linear Regression model is something I wanted to try, but it is not the best thing for a time series as we have here. Therefore, I wanted to try the same experiment but this time using Keras to build a Long Short Term Memory Recurrent Neural Network (LSTM). This link provides a very good example. This is a better approach as one of the inputs for the cell is the output of the previous cell at the same time we can input more features. This is a graphic for LSTM from one of the very good Andrew Ng. courses:

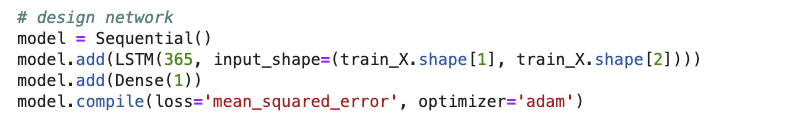

Keras makes the LSTM implementation easy and I can reuse the queries I had previously created to extract the values for my features from my single source of truth.

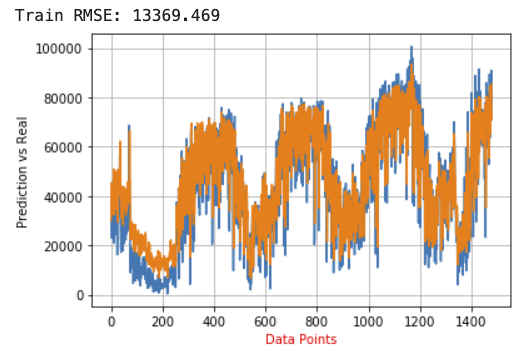

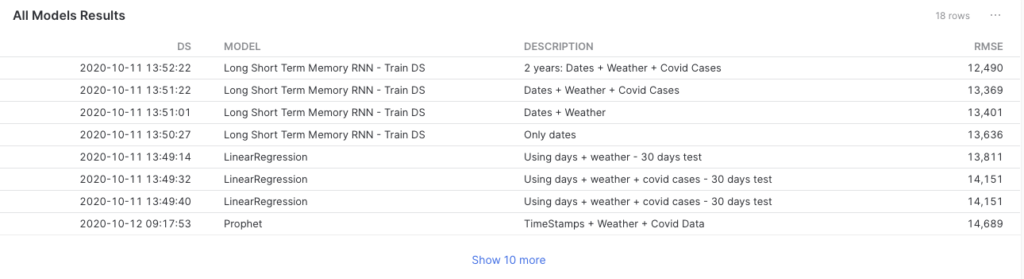

Using a Sequential model my results are better and I can see how I have a better fit with a reduced RSME for the case where I combine time, weather and covid cases:

My new model has reduced the RMSE down to 13369.

Here again I have seen how adding data from weather and covid cases has produced an improvement in my results, although the Sequential model works better in any of the cases.

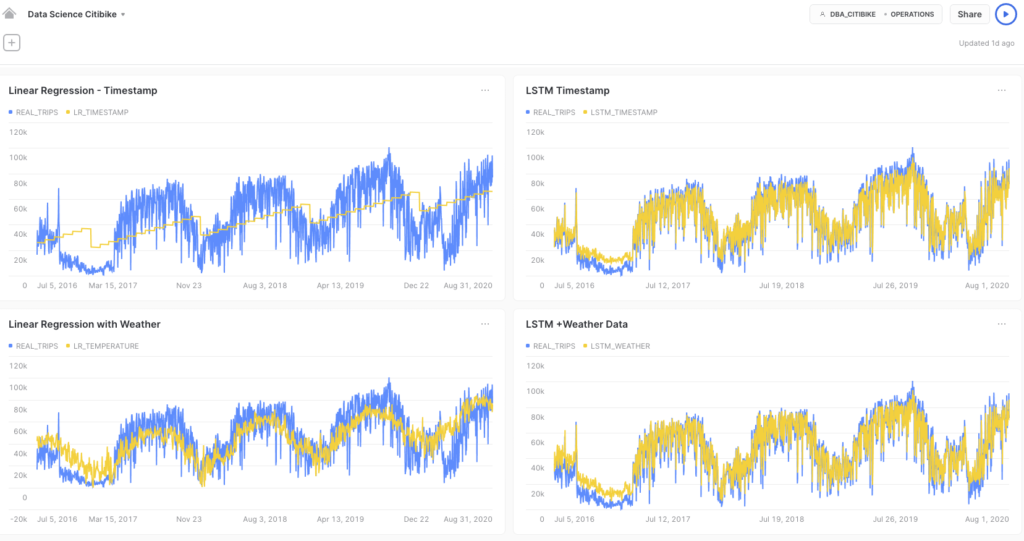

Another advantage of using Snowflake is that I can write back all my results to Snowflake and this can be used with different dashboards where I can see the evolution of all my models. My Jupyter notebooks are great, but this simplifies having a view for all my models. Here I am using Snowsight to get my dashboards:

I also added the Prophet model provided by Facebook to test my assumptions that weather and covid cases will improve my model. When only using trips data, the RMSE was 18287 while adding weather and covid cases it was reduced down to 14688. Each time my models were created, the results were automatically stored back in Snowflake and now I can review all of them in a simple view:

One way to drastically improve the Time to Value for any data scientist problem is using any of the Technology Partners around AI for Snowflake. In my case I wanted to give a try to DataRobot. Test it is as easy as login into Snowflake, click on Partners and select DataRobot.

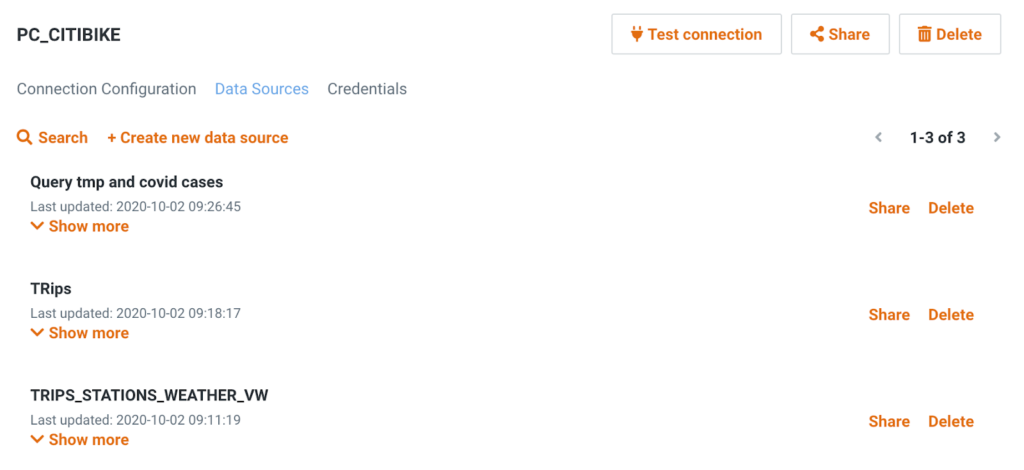

An account will be automatically created and a connection between DataRobot and Snowflake. From there select your data sources:

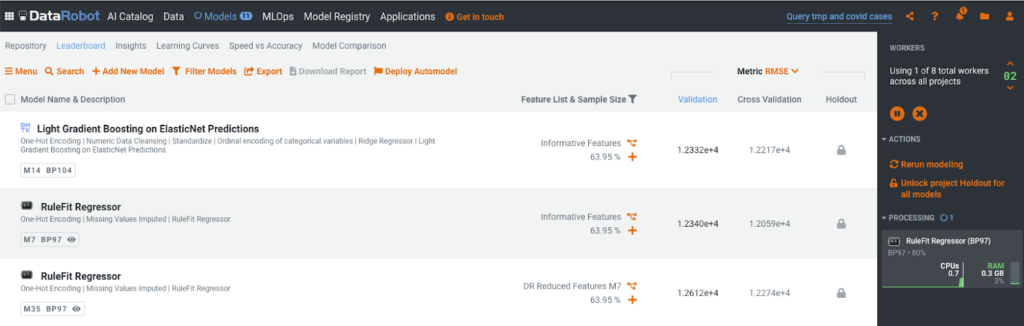

From that point, just select the data sources and start building your models. Here is a screenshot for the different models tested with just a few clicks. We can see how they improve my results. While I just spent a few minutes with DataRobot, I had to spend a good amount of time building and testing my own models. The Time to Value is clearly reduced and the integration with Snowflake is quite simple:

As a summary, we have seen how using Snowflake Data Cloud Platform enables companies to use data from different sources that will generate better business outcomes. It was easy to combine data from three different sources to improve my ML models providing better predictions. Finally, the use of Technology Partners around Snowflake will provide better models and insights and will reduce the time you spend building models.

Carlos Carrero.-